The groundwork of any secure system installation is strong authentication: the process of verifying a user's identity by checking one or more known factors. Factors can be:

- Shared Knowledge: a password or the answer to a question. This is the most common factor, and often the only one, used by computer systems today.

- Biometric Attributes: for example fingerprints or iris patterns.

- Items One Possesses: a smart card or a phone. Aside from shared knowledge, the phone is probably one of the most common factors in use today.

A system that takes more than one factor into account for authentication is known as a multi-factor authentication system. The importance of knowing a user's identity with a given level of certainty cannot be overestimated.

All other components of a secure environment, like Authorization, Audit, Data Protection, and Administration, rely heavily on strong authentication. Authorization or auditing only make sense if the identity of a user cannot be compromised. Today Hadoop has solutions for nearly all aspects of enterprise-grade security, especially with the advent of Apache Argus.

They all start with implementing strong authentication using Kerberos. When it comes to Hadoop there is no real choice: one is left either with no authentication at all (simple) or with Kerberos. The only other real solution would be to place the cluster in a DMZ and disallow any access to it except through the Knox gateway.

Not least since Microsoft integrated Kerberos into Active Directory, Kerberos can be seen as the most widely used authentication protocol today. When people speak of Kerberos today, they commonly mean Kerberos 5, which was published in 1993.

From here we'll go ahead and configure a Hadoop installation with Kerberos. As an example, we are going to use the Hortonworks Sandbox together with Ambari.

Setting up Kerberos on CentOS

Before we can set up a kerberized environment, we need to install Kerberos on the sandbox VM. We also need to create a security realm to use during setup.

Installing Kerberos on CentOS:

$ yum -y install krb5-server krb5-libs krb5-workstation

Creating our realm:

$ cat /etc/krb5.conf

[logging]

default = FILE:/var/log/krb5libs.log

kdc = FILE:/var/log/krb5kdc.log

admin_server = FILE:/var/log/kadmind.log

[libdefaults]

default_realm = MYCORP.NET

dns_lookup_realm = false

dns_lookup_kdc = false

ticket_lifetime = 24h

renew_lifetime = 7d

forwardable = true

[realms]

MYCORP.NET = {

kdc = sandbox.mycorp.net

admin_server = sandbox.mycorp.net

}

[domain_realm]

.mycorp.net = MYCORP.NET

mycorp.net = MYCORP.NET

$ cat /var/kerberos/krb5kdc/kdc.conf

[kdcdefaults]

kdc_ports = 88

kdc_tcp_ports = 88

[realms]

MYCORP.NET = {

#master_key_type = aes256-cts

acl_file = /var/kerberos/krb5kdc/kadm5.acl

dict_file = /usr/share/dict/words

admin_keytab = /var/kerberos/krb5kdc/kadm5.keytab

supported_enctypes = aes256-cts:normal aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal

}
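Both files have to agree on the realm name. As a quick sanity check, here is a minimal POSIX shell sketch (the function names `default_realm`, `defined_realms` and `realms_match` are hypothetical helpers, not part of any Kerberos tooling) that reads `default_realm` from a krb5.conf-style file and confirms that the same realm is declared as `NAME = {` in a kdc.conf-style file. It assumes the simple one-setting-per-line layout used above.

```shell
#!/bin/sh
set -eu

# Print the default_realm value from a krb5.conf-style file (first match only).
default_realm() {
  awk -F= '/^[ \t]*default_realm[ \t]*=/ { gsub(/[ \t]/, "", $2); print $2; exit }' "$1"
}

# Print every realm name declared as "NAME = {" in a kdc.conf/krb5.conf-style file.
defined_realms() {
  awk '/^[ \t]*[^ \t#]+[ \t]*=[ \t]*[{]/ { print $1 }' "$1"
}

# Succeed only if the default realm of the krb5.conf given as $1
# is also declared in the kdc.conf given as $2.
realms_match() {
  want_realm="$(default_realm "$1")"
  [ -n "$want_realm" ] || return 1
  defined_realms "$2" | grep -xF -- "$want_realm" >/dev/null
}
```

For example, `realms_match /etc/krb5.conf /var/kerberos/krb5kdc/kdc.conf && echo "realm OK"` should succeed with the two files shown above.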

Before you proceed with this configuration, it is a good idea to add sandbox.mycorp.net to your /etc/hosts file and to change the ACL configuration for krb5 (/var/kerberos/krb5kdc/kadm5.acl) to contain the following:

$ cat /var/kerberos/krb5kdc/kadm5.acl

*/admin@MYCORP.NET *
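Since setup steps like these tend to be repeated, a helper that appends a line only when it is missing keeps /etc/hosts and kadm5.acl free of duplicates. This is a minimal POSIX shell sketch; `ensure_line` is a hypothetical helper, and `<sandbox-ip>` in the usage comment is a placeholder for your VM's address, not a value from this post.

```shell
#!/bin/sh
set -eu

# Append the line $2 to the file $1 unless an identical line is already present,
# so re-running the setup does not duplicate entries.
ensure_line() {
  if ! grep -qxF -- "$2" "$1" 2>/dev/null; then
    # Start on a fresh line if the file does not end with a newline.
    if [ -s "$1" ] && [ -n "$(tail -c 1 "$1")" ]; then
      printf '\n' >> "$1"
    fi
    printf '%s\n' "$2" >> "$1"
  fi
}

# Usage (as root on the sandbox; replace <sandbox-ip> with your VM's address):
# ensure_line /etc/hosts "<sandbox-ip> sandbox.mycorp.net"
# ensure_line /var/kerberos/krb5kdc/kadm5.acl "*/admin@MYCORP.NET *"
```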

Now we are ready to create our Kerberos database:

$ kdb5_util create -s

Loading random data

Initializing database '/var/kerberos/krb5kdc/principal' for realm 'MYCORP.NET',

master key name 'K/M@MYCORP.NET'

You will be prompted for the database Master Password.

It is important that you NOT FORGET this password.

Enter KDC database master key:

Re-enter KDC database master key to verify:

After that, we can start kadmin and create an administrative user according to the ACL we defined earlier:

$ service kadmin start

$ kadmin.local -q "addprinc sandbox/admin"

Authenticating as principal root/admin@MYCORP.NET with password.

WARNING: no policy specified for sandbox/admin@MYCORP.NET; defaulting to no policy

Enter password for principal "sandbox/admin@MYCORP.NET":

Re-enter password for principal "sandbox/admin@MYCORP.NET":

Principal "sandbox/admin@MYCORP.NET" created.

We can now start the Kerberos KDC service (krb5kdc) and test our administrative user:

$ service krb5kdc start

$ klist

klist: No credentials cache found (ticket cache FILE:/tmp/krb5cc_0)

$ kinit sandbox/admin

Password for sandbox/admin@MYCORP.NET:

$ klist

Ticket cache: FILE:/tmp/krb5cc_0

Default principal: sandbox/admin@MYCORP.NET

Valid starting Expires Service principal

10/05/14 13:30:12 10/06/14 13:30:12 krbtgt/MYCORP.NET@MYCORP.NET

renew until 10/05/14 13:30:12

$ kdestroy

$ klist

klist: No credentials cache found (ticket cache FILE:/tmp/krb5cc_0)
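To check for a ticket from a script rather than by reading klist output, `klist -s` can be used: it prints nothing and signals through its exit status whether a credentials cache with unexpired tickets exists. A minimal sketch; `has_valid_ticket` and the `KLIST` override are hypothetical names for illustration.

```shell
#!/bin/sh
# KLIST can point at a specific klist binary; it defaults to plain `klist`.
KLIST="${KLIST:-klist}"

# `klist -s` is silent; exit status 0 means a cache with unexpired tickets exists.
has_valid_ticket() {
  "$KLIST" -s 2>/dev/null
}

if has_valid_ticket; then
  echo "ticket: valid"
else
  echo "ticket: none (run kinit first)"
fi
```

After `kinit sandbox/admin` this should report a valid ticket, and after `kdestroy` it should report none.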

Kerberizing Hadoop with Ambari

UPDATE:

Read here about using the Ambari security wizard, available since Ambari 2.x, to kerberize an HDP cluster with an existing KDC.

Ambari provides a smooth wizard we can follow to kerberize our Hadoop installation. Go to Ambari Admin, open the Security menu, and follow the provided enabling process.

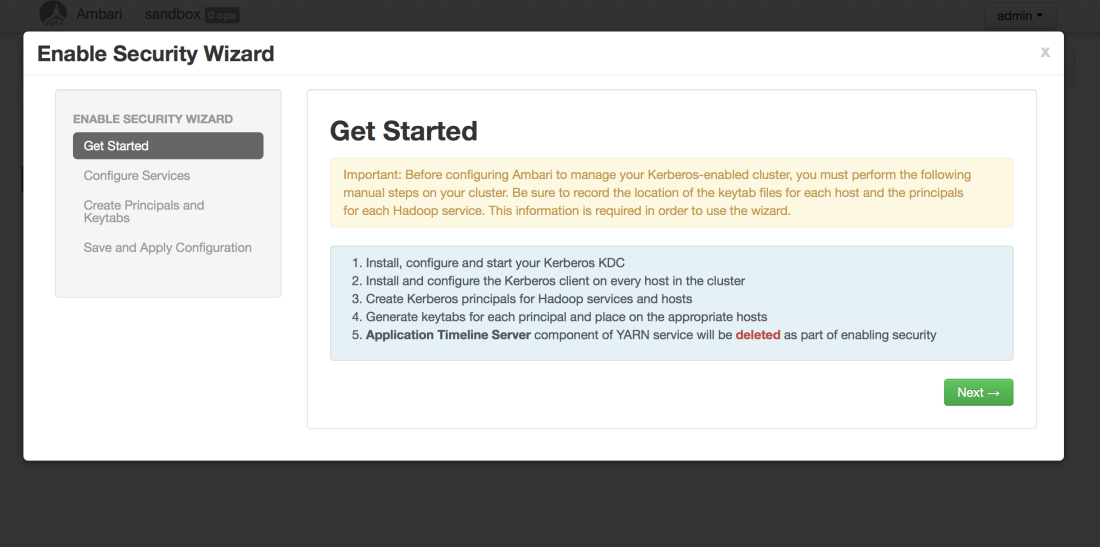

By clicking on the “Enable Security” button you will start the wizard:


You can then use Ambari to configure the realm for the required keytabs that we’ll be creating throughout the rest of this process.


This will create a CSV file that we can download and use for generating a keytab creation script. First download the CSV file as follows:


Move the downloaded CSV file to your sandbox using scp. Ambari provides a script that turns the CSV file we previously created into a keytab creation script, which we then run to generate the keytabs:


$ scp -P 2222 ~/Downloads/host-principal-keytab-list.csv root@localhost:

$ /var/lib/ambari-server/resources/scripts/keytabs.sh host-principal-keytab-list.csv > gen_keytabs.sh

$ chmod u+x gen_keytabs.sh

$ ./gen_keytabs.sh

This should create the keytabs needed to run the Hadoop services in a kerberized environment. The keytabs are made available in the keytabs_sandbox.hortonworks.com folder or as a tar archive, keytabs_sandbox.hortonworks.com.tar.

Unfortunately, the keytabs.sh script is not complete: it does not create a keytab for the YARN ResourceManager. To create one, open the gen_keytabs.sh script, copy a line such as kadmin.local -q "addprinc -randkey oozie/sandbox.hortonworks.com@MYCORP.NET", and change oozie to rm.
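For a ResourceManager entry, the principal also needs a keytab. The sketch below shows two kadmin.local calls such an entry would typically involve: one to create the principal with a random key and one to export its key with `xst` (an alias for `ktadd`). The realm, host name, output path, and the helpers `run` and `create_rm_keytab` are assumptions for illustration, not the contents of gen_keytabs.sh. By default the script only prints the commands, without shell quoting, for review. Set APPLY=1 to run them.

```shell
#!/bin/sh
set -eu

# Assumed values; adjust to your sandbox.
REALM="${REALM:-MYCORP.NET}"
RM_HOST="${RM_HOST:-sandbox.hortonworks.com}"
RM_KEYTAB="${RM_KEYTAB:-./rm.service.keytab}"

# Print each command (unquoted, for review only) unless APPLY=1, then execute it.
run() {
  if [ "${APPLY:-0}" = "1" ]; then
    "$@"
  else
    printf '%s\n' "$*"
  fi
}

create_rm_keytab() {
  principal="rm/${RM_HOST}@${REALM}"
  # Create the principal with a random key, like the copied oozie line.
  run kadmin.local -q "addprinc -randkey ${principal}"
  # Export the principal's key into a keytab file (xst = ktadd).
  run kadmin.local -q "xst -k ${RM_KEYTAB} ${principal}"
}

create_rm_keytab
```

Note that `xst` generates a fresh random key each time it runs, which invalidates earlier keytabs for the same principal.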

Make sure you move the keytabs to /etc/security/keytabs and set the permissions accordingly:

$ chown hdfs. /etc/security/keytabs/dn.service.keytab

$ chown falcon. /etc/security/keytabs/falcon.service.keytab

$ chown hbase. /etc/security/keytabs/hbase.headless.keytab

$ chown hbase. /etc/security/keytabs/hbase.service.keytab

$ chown hdfs. /etc/security/keytabs/hdfs.headless.keytab

$ chown hive. /etc/security/keytabs/hive.service.keytab

$ chown mapred. /etc/security/keytabs/jhs.service.keytab

$ chown nagios. /etc/security/keytabs/nagios.service.keytab

$ chown yarn. /etc/security/keytabs/rm.service.keytab

$ chown hdfs. /etc/security/keytabs/nn.service.keytab

$ chown oozie. /etc/security/keytabs/oozie.service.keytab

$ chown yarn. /etc/security/keytabs/nm.service.keytab

$ chown ambari-qa. /etc/security/keytabs/smokeuser.headless.keytab

$ chown root:hadoop /etc/security/keytabs/spnego.service.keytab

$ chown storm. /etc/security/keytabs/storm.service.keytab

$ chown zookeeper. /etc/security/keytabs/zk.service.keytab

$ ll /etc/security/keytabs

total 64

-r-------- 1 hdfs hadoop 466 Oct 5 13:56 dn.service.keytab

-r-------- 1 falcon hadoop 490 Oct 5 13:56 falcon.service.keytab

-r--r----- 1 hbase hadoop 334 Oct 5 13:56 hbase.headless.keytab

-r-------- 1 hbase hadoop 484 Oct 5 13:56 hbase.service.keytab

-r--r----- 1 hdfs hadoop 328 Oct 5 13:56 hdfs.headless.keytab

-r-------- 1 hive hadoop 478 Oct 5 13:56 hive.service.keytab

-r-------- 1 mapred hadoop 472 Oct 5 13:56 jhs.service.keytab

-r-------- 1 nagios nagios 490 Oct 5 13:56 nagios.service.keytab

-r-------- 1 yarn hadoop 466 Oct 5 13:56 nm.service.keytab

-r-------- 1 hdfs hadoop 466 Oct 5 13:56 nn.service.keytab

-r-------- 1 oozie hadoop 484 Oct 5 13:56 oozie.service.keytab

-r-------- 1 yarn hadoop 466 Oct 5 13:56 rm.service.keytab

-r--r----- 1 ambari-qa hadoop 358 Oct 5 13:56 smokeuser.headless.keytab

-r--r----- 1 root hadoop 3810 Oct 5 13:56 spnego.service.keytab

-r-------- 1 storm hadoop 484 Oct 5 13:56 storm.service.keytab

-r-------- 1 zookeeper hadoop 508 Oct 5 13:56 zk.service.keytab
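The same ownership and permissions can be written as a table-driven loop, which is easier to review and to re-run. This sketch takes the owners from the chown commands and the modes from the listing (440 for the headless and SPNEGO keytabs, 400 otherwise). It uses GNU chown's `user:` form, which, like `user.`, also assigns the user's login group. KEYTAB_DIR, APPLY and the helper names are assumptions, and the script only prints the commands unless APPLY=1 is set.

```shell
#!/bin/sh
set -eu

KEYTAB_DIR="${KEYTAB_DIR:-/etc/security/keytabs}"

# Print each command (unquoted, for review only) unless APPLY=1, then execute it.
run() {
  if [ "${APPLY:-0}" = "1" ]; then
    "$@"
  else
    printf '%s\n' "$*"
  fi
}

# One line per keytab: file name, owner, mode.
fix_keytab_perms() {
  while read -r file owner mode; do
    run chown "$owner" "$KEYTAB_DIR/$file"
    run chmod "$mode" "$KEYTAB_DIR/$file"
  done <<'EOF'
dn.service.keytab hdfs: 400
falcon.service.keytab falcon: 400
hbase.headless.keytab hbase: 440
hbase.service.keytab hbase: 400
hdfs.headless.keytab hdfs: 440
hive.service.keytab hive: 400
jhs.service.keytab mapred: 400
nagios.service.keytab nagios: 400
rm.service.keytab yarn: 400
nn.service.keytab hdfs: 400
oozie.service.keytab oozie: 400
nm.service.keytab yarn: 400
smokeuser.headless.keytab ambari-qa: 440
spnego.service.keytab root:hadoop 440
storm.service.keytab storm: 400
zk.service.keytab zookeeper: 400
EOF
}

fix_keytab_perms
```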

We are now ready to have Ambari restart the services with the Kerberos configuration applied. This should bring everything back up in a kerberized environment.



Best blog for Ambari + Kerberos; this tutorial cleared up a lot of doubts. I'm not able to start the Timeline Server service; it fails at 35 percent. Any idea? The error is as follows:

2014-10-31 09:32:57,633 - Generating config: /etc/hadoop/conf/mapred-site.xml

2014-10-31 09:32:57,633 - File['/etc/hadoop/conf/mapred-site.xml'] {'owner': 'mapred', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}

2014-10-31 09:32:57,635 - Changing owner for /etc/hadoop/conf/mapred-site.xml from 514 to mapred

2014-10-31 09:32:57,635 - XmlConfig['capacity-scheduler.xml'] {'owner': 'hdfs', 'group': 'hadoop', 'conf_dir': '/etc/hadoop/conf', 'configuration_attributes': ..., 'configurations': ...}

2014-10-31 09:32:57,647 - Generating config: /etc/hadoop/conf/capacity-scheduler.xml

2014-10-31 09:32:57,647 - File['/etc/hadoop/conf/capacity-scheduler.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}

2014-10-31 09:32:57,648 - Changing owner for /etc/hadoop/conf/capacity-scheduler.xml from 514 to hdfs

2014-10-31 09:32:57,649 - File['/var/run/hadoop-yarn/yarn/yarn-yarn-timelineserver.pid'] {'action': ['delete'], 'not_if': 'ls /var/run/hadoop-yarn/yarn/yarn-yarn-timelineserver.pid >/dev/null 2>&1 && ps `cat /var/run/hadoop-yarn/yarn/yarn-yarn-timelineserver.pid` >/dev/null 2>&1'}

2014-10-31 09:32:57,677 - Execute['ulimit -c unlimited; export HADOOP_LIBEXEC_DIR=/usr/hdp/current/hadoop-client/libexec && /usr/hdp/current/hadoop-yarn-client/sbin/yarn-daemon.sh --config /etc/hadoop/conf start timelineserver'] {'not_if': 'ls /var/run/hadoop-yarn/yarn/yarn-yarn-timelineserver.pid >/dev/null 2>&1 && ps `cat /var/run/hadoop-yarn/yarn/yarn-yarn-timelineserver.pid` >/dev/null 2>&1', 'user': 'yarn'}

2014-10-31 09:32:58,772 - Execute['ls /var/run/hadoop-yarn/yarn/yarn-yarn-timelineserver.pid >/dev/null 2>&1 && ps `cat /var/run/hadoop-yarn/yarn/yarn-yarn-timelineserver.pid` >/dev/null 2>&1'] {'initial_wait': 5, 'not_if': 'ls /var/run/hadoop-yarn/yarn/yarn-yarn-timelineserver.pid >/dev/null 2>&1 && ps `cat /var/run/hadoop-yarn/yarn/yarn-yarn-timelineserver.pid` >/dev/null 2>&1', 'user': 'yarn'}

2014-10-31 09:33:03,903 - Error while executing command 'start':

Traceback (most recent call last):

File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 122, in execute

method(env)

File "/var/lib/ambari-agent/cache/stacks/HDP/2.0.6/services/YARN/package/scripts/application_timeline_server.py", line 42, in start

service('timelineserver', action='start')

File "/var/lib/ambari-agent/cache/stacks/HDP/2.0.6/services/YARN/package/scripts/service.py", line 59, in service

initial_wait=5


The Timeline Server is part of YARN; please check /var/log/hadoop-yarn/ on the Timeline Server node for its error logs.

Ambari disables the Application Timeline Server (ATS) automatically as part of the “kerberization”. The ATS does not play well together with Kerberos at this time. You’ll notice that’s mentioned when starting the process, and again on the final page of the wizard (shown above, as the last figure). It is not advisable to try to start it manually.


This does not sound right. Did you create the additional keytab for the YARN RM as mentioned in the post? What is the error message you are seeing?


A bit late, but if this is not working, create a new keytab for a principal like ats/_HOST@MYCORP.NET and set these parameters:

yarn.timeline-service.principal = ats/_HOST@MYCORP.NET

yarn.timeline-service.keytab = /etc/security/keytabs/ats.service.keytab

yarn.timeline-service.http-authentication.type = kerberos

yarn.timeline-service.http-authentication.kerberos.principal = HTTP/_HOST@MYCORP.NET

yarn.timeline-service.http-authentication.kerberos.keytab = /etc/security/keytabs/spnego.service.keytab

This is working for me with HDP2.1.3.


great help !! Thanks Henning for this blog


Great guide. Thanks!
